Twenty Questions on the Ethics of Robotics and Pepper Robot

Journalist Eithne Dodd interviewed Philip Graves of GWS Robotics on May 26th, 2018.

In early June, parts of the interview were used in her article for Decode Magazine on the use of Pepper Robot in assisting patients with autism and dementia.

With her kind permission, we now publish the full unedited transcript of her thought-provoking interview.

1. If an AI machine has no pain receptors, is it ethical to kick it?

No. While causing pain to life-forms that can experience it is infinitely more unethical, kicking an artificially intelligent machine is still unethical.

There are two main reasons I can think of for believing this.

The first is that the wanton destruction of, or infliction of deliberate damage upon, useful non-destructive objects is generally unethical, because once damaged and deformed they are less likely to be useful to anyone. If an artificially intelligent device is no longer wanted, a more ethical way to dispose of it would be to give or sell it to someone who wants it.

This is partly an environmental argument but could also be a purely economic one from the standpoint of the efficient use of limited resources.

If the device is obsolete technology for which there is no longer a market, or has broken down irreparably, then there are ethical procedures for recycling its parts, which deforming it with a sharp kick will do nothing to assist and may hinder.

The environmental cost of producing a sophisticated artificially intelligent device in the first place is a considerable one, and it would be recklessly irresponsible to then take actions to destroy it if it is working properly and could deliver continuing value to others.

The second reason is that the impulse to kick or otherwise aggressively attack an apparently intelligent object is an unethical one to act upon, even if that appearance is known to be an illusion. In acting on it, one would be giving free rein to one's appetite to hurt or destroy apparently living entities out of anger or spite.

While there are many who would feel that even damaging obviously inanimate property, such as by kicking in a metal garage door or smashing a glass, is a recklessly destructive application of aggressive impulses (and may even be criminal if the property belongs to someone else), targeting a machine that is designed to behave in a lifelike fashion could be seen as an aggravated offence. At the very least, it sets a very bad example and could be mentally training the one carrying out this act to feel more confident about or at ease with attacking real live creatures in the future.

It is important to note, however, that the point encapsulated in this second reason does not imply that artificially intelligent machines have rights. They are still machines, but it is nonetheless demonstrably unethical with regard to the aforementioned considerations to kick or otherwise aggressively attack them.

2. If you were to kick one of the robots at your work, would disciplinary action be taken against you? If so, on what grounds would that disciplinary action be?

I would very much hope and expect that disciplinary action would be taken against me in that circumstance! In fact, I would hope, and deserve, to be sacked. There would be at least two grounds for this - one is that I would have caused criminal damage to my employer's property, and the other is that I would have behaved in an unacceptably aggressive fashion in the workplace, not befitting my status as an employee.

As an ardent pacifist who has never punched or kicked a soul - not even when on the receiving end of such treatment by other boys in my schooldays - I would be astonished and shocked at myself if I were ever to find myself in this situation.

3a. Does GWS Robotics have a code of conduct for how to treat your fellow human workers?

I'm not aware of an explicit one. It's a small, family-run business, and the directors (my elder brother David and father Richard) generally make an intuitive personality assessment of prospective employees as well as assessing the fitness of their technical skills and training to do the job before offering them a role.

Personality assessment at the time of interview is to a large degree a subjective process, but the general unspoken assumption is that anyone who passes the initial screening process by the directors is presumed of 'good character' (or at least, willing and able to abide by standards that would generally be considered to constitute this while on duty in the workplace) until proven otherwise.

If a newly hired employee began to show previously unsuspected signs of problematically aggressive or bullying behaviour to other members of staff, the directors would move fairly swiftly to terminate their employment.

3b. Does it have one for AI machines? If not, do you think it is likely to develop one?

There is again no explicit code of conduct for the treatment of AI machines at GWS Robotics, but the unwritten assumption is that such devices should not be abused. Whether the individual employee understands this simply in terms of the devices being the employer's property, or further takes the view that it is generally wrong to treat such devices abusively, is perhaps a moot point, provided that respectful and non-destructive standards of behaviour are maintained.

It is possible that the company may develop an explicit code in the future. This would be more likely if it grew in size to the point where it became important to codify the otherwise merely generally understood reasonable expectations of employee conduct.

4. What is your job role?

Within GWS Robotics, I am officially a copywriter and digital marketer. This entails writing text for the pages of the company website, optimising the website for visibility in search engines, and writing articles about topics of general interest in the domain of robotics for the website.

In practice, I also participate in promotional opportunities by liaising with the press and with organisations, such as schools, that want to arrange for a visit by our robot; operate the robot at expos and shows where it is interacting with the public; and contribute specific ideas to the core programming team for the development of applications, behaviours and lines of speech for use by the robot in particular settings.

5. Do you help to build artificially intelligent machines?

No. GWS Robotics does not construct robot hardware. We custom-program hardware produced by much larger corporations such as SoftBank Robotics, the company that owns Pepper robot. The development of robot hardware requires a particular set of skills in mechanical engineering. This is not our collective interest, which lies much more in the software (programming) side. Others produce robots; we envision creative uses for them and program them accordingly.

6. Are computers smarter than us?

That depends on one's understanding of 'smart', but, even taking it for granted that the intended meaning of 'smart' here is the American sense of 'intelligent' and not the traditional British or Irish one of 'well-dressed', I would generally say 'no' in answer to this question.

The two areas of relevance in which computers excel and already win over humans are the power to process calculations and the exact, lossless storage and recall of memory.

Already in the 1980s, the former attribute (processing power) was being demonstrated effectively by chess computers and chess software that was difficult for players of ordinary club-level abilities to defeat. And by 1997, a chess computer had managed to defeat a reigning chess world champion in Garry Kasparov. Since 1997, computer processing power has increased many times, and with it the advantage of computers over humans in terms of raw mathematical processing power.

Similarly, the memory and quickly retrievable data storage capacities of computers have vastly increased since 1997, when a typical hard drive on a well-specified new PC would hold 2 GB of data, compared with 2 TB 20 years later, a thousandfold increase in two decades alone.

Humans are no match for advanced computers in these attributes because computers are precision-built from solid materials continuously powered by an even stream of electricity, and are designed to detect, process and store nothing but digital binary code, whereas humans are organic life-forms made from cells that depend on a regular supply of a host of vital nutrients, have evolved to put their own needs for survival and companionship before mathematical operations, and are designed to detect, process and store analogue signals from the complex physical world. In short, humans are equipped to live and to experience their environments, computers merely to process. Computers are, after all, machines.

But our deficiencies in comparison to computers in the specific areas outlined above do not make us less intelligent than computers. The stem of the word 'intelligent' is the Latin verb intellegere, which means 'to understand'. Computers can process a lot of binary code, and quickly deliver informative, appropriate outputs reflecting that processing when they have been programmed to do so for a particular purpose. But they understand absolutely nothing, because they are not conscious entities, and have no brain, nervous system, feelings or soul.

Any outputs from computers that might appear to us to show intelligence are merely the product of how they were programmed by intelligent human programmers. And the same is true of robots. It doesn't really matter whether we dress them in desktop cases or in robotic bodies - they are still digital processors without consciousness. In the final analysis, they are programmable arrays of logic gates and nothing more.

Humans have the quality of true consciousness that gives them vastly superior intellectual potential to any machine. We are able to interpret our environment based on continuous experience and learning at very subtle levels, conscious and subconscious, and to understand from the predictably replicable patterns in the behaviour of the external world that it is real and that it interacts with us. We also understand that we have limited lifespan and fragile bodies and health, and can draw behavioural and moral lessons from this. Not all of us are able to solve complex equations that to a well-programmed computer would be easy, but that again is not a deficiency in true intelligence, which lies more in the wisdom of intelligent adaptation to reality in all its facets, personal and material. Some of us can even theorise and philosophise on open-ended questions of the nature, meaning or value of life and existence in ways that would be completely incomprehensible to a computer.

7. How do we know artificial intelligence from human (organic?) intelligence?

A fair test of this would require blindness on the part of the beholder as to whether he or she was interacting with a human or a computer. To achieve this blindness, the interaction would have to be mediated by digital or electronic means that prevented the obvious signatures of human behaviour such as the ebb and flow of a continuous human voice or the appearance of a real human face from giving the game away.

If you ask a series of questions to a hypothetical digital interface that may be responded to by either an unseen human or a computer at a distance, it should ordinarily be possible for you to distinguish the human from the computer after a while, as a result of the computer delivering obviously repetitive or stock answers. However, it is worth remembering that artificial intelligence is designed to give the illusion of true intelligence and, if programmed by a thoughtful human programmer with a thorough repertoire of answers in response to all manner of possible questions, an artificially intelligent device accessed blind through a digital interface could deliver a fairly convincing illusion of being intelligent. This is ultimately because the original programmer's intelligence is being experienced by the one receiving the answers from the artificially intelligent device. It may then take considerable probing to shatter the illusion by exposing unnatural patterns of responses.

Fortunately, in most situations where we interact with either people or A.I. machines, we are exposed to plenty of other cues by which to differentiate their nature. In social situations where interactions are conducted in situ rather than through electronic means of communication, for example, there are numerous additional signals by which people communicate, including movements, facial expression, patterns of eye contact, variations in tone and amplitude of voice, and energy or vibrations, that we may register at a subconscious level, and that allow us to understand a lot about the other person and what he or she is thinking or feeling on various levels.

Social robots are nowadays being programmed to read some of these cues and respond to them, but this is not an organic process, unlike the one that humans experience, so at best it can give a fairly crude set of data that feed into the interaction with the human onlooker.

8. Do you think we will always be able to tell the difference between the two kinds of intelligence?

As the self-learning capabilities built into artificially intelligent devices become ever more sophisticated, we can expect them to deliver ever more sophisticated illusions of intelligence that could trick onlookers blinded as to their true nature into imagining them to possess a form of consciousness. However, the subtleties of real human communication are very sophisticated, and the volatile sentimental and emotional nature of organic human beings as reflected in their attachments to each other and to pets and other life-forms would be extremely difficult for any programmed artificially intelligent device to pull off entirely persuasively.

Actors are trained in effect to lie, from a strictly literal point of view, but can do so in a persuasive way because they are humans playing the roles of other humans using the human form and while interacting in real time with those around them. Even then, we can tell the difference between people acting and being themselves most of the time.

Computers and robots don't have the resources by which to act. They simply process inputs and respond with outputs, exactly as they have been programmed to do by their human programmers.

9. Do you work with robot Pepper? What kind of intelligence and intelligence level does Pepper have?

Yes, I do. Pepper I would assess to be a stepping stone in the evolution of artificially intelligent social robots. Its level of artificial intelligence is moderate in absolute terms and a lot more limited than we can expect to be exhibited by state-of-the-art social robots in another thirty to fifty years from now, but enough to make for some entertaining experiences for those who seek to interact with it.

Pepper is able to use visual sensors and a microphone to draw information about its immediate environment, and is programmed to respond in a fairly natural-looking manner, for example by turning to face the source of the loudest human voice in its vicinity, or following the human standing closest to it within its field of vision with its eyes. It is programmed with voice recognition and language interpretation capabilities, but these are relatively rudimentary out of the box, and custom programming is needed to develop them. It is also programmed to detect some signs of basic emotions such as sadness and anger and to adjust its behavioural outputs accordingly.

People tend to find interacting with Pepper to be a lot of fun, but it is very obviously a robot, and no-one interacting with it could reasonably be expected to mistake it for a human. It is however possible for human operators observing Pepper's interactions with a human to remotely type in speech that directly and appropriately responds to the human and is spoken by Pepper. This kind of manual intervention can provide an entertaining illusion, much as a magic trick would, if the human interacting with the robot is not aware of the presence of the operator. It can be a useful standby at shows and exhibitions where the background noise is too great for Pepper to hear and respond to what is being said to it effectively. But it is not true artificial intelligence of course.

10. Should one refer to Pepper as 'he' or 'she'? Is Pepper male or female?

Overall, Pepper has somewhat androgynous features, with a head that looks more obviously robotic than possessed of a gender, a relatively flat upper body that might more often be assumed a hallmark of a male than a female, but a hip-to-waist area ratio that usually creates a more feminine impression.

The men in our office used to commonly refer to Pepper as 'he' and 'him', but the external consensus seems to be that, as one of our Twitter followers insisted, 'Pepper is a girl'.

Ultimately, Pepper is a machine, and therefore a dispassionate scientist would probably prefer 'it', a practice to which I am adhering for the purposes of this interview. But since the whole idea of social robotics is to program robots to interact with humans in a way that creates a pleasant social experience, and people are prone to divide up their social worlds into genders, it is understandable that most prefer to use either the male or the female pronouns, depending on their perceptions.

11. Can one observe Pepper or other robots you work with getting smarter / accumulating knowledge?

One can observe Pepper tracking its environment, and it can permanently learn the layout of the location where it is kept by moving around it and detecting obstacles, then committing a map of the environment to memory. However, its ability to learn by itself from interactions with humans is relatively limited, and by and large, improving either the apparent intelligence of its behaviour or its knowledge base requires improvements in custom programming.

12. What characteristics does Pepper have? Does he have them or does he display them? How do you know the difference?

I think I covered many of Pepper's behavioural characteristics in outline under question 9 above.

I think it is reasonable to say that even a machine can have characteristics. A characteristic is merely a defining feature. You know the difference between a possessed characteristic and a displayed characteristic according to whether or not there are outwardly manifest signs of it. Some characteristics of a robot will be manifestly displayed through its behaviour, while others may be highly technical ones that are outwardly invisible to the general public or the end-user but known to the hardware and software developers that have worked on the device. I would contend that a displayed characteristic is still a characteristic, even if it is designed to convey an illusion of autonomous intelligence that is not really there.

It may be worth mentioning Pepper's mechanical characteristics at this juncture. Pepper contains many joints. It has fingers with multiple separately angled imitation finger bones, so it can curl up its hands and uncurl them again in quite a human-like manner. It can tilt its waist at different angles, and is especially good at moving its arms and neck. However, it does not have feet, and when it 'decides' to move, it simply speeds off on its wheeled base, either until it detects a risk of collision, or until it 'decides' to stop again for some other reason. Its motors allow it to move in four different directions.

13. Can Pepper display a social intelligence?

Yes, but only within limited parameters. It can for instance flash its eyes in different colours to represent emotions, and respond to the proximity of people in ways that appear reasonably natural, for example by avoiding hazardous collisions but accepting gentle contact by people who are not behaving aggressively. However, if you compared a robot like Pepper with a real, live pet like a dog or cat in comparable situations, the social intelligence of the real pet would be observed to be vastly superior to that of the robot.

14. Do you think we will ever live in a world where robots can do anything a human can do?

If by 'anything' is meant 'at least one thing', then we already got there in the earliest days of the development of robotics. But if by 'anything' is meant 'everything', then no, we will never live in such a world. There will always be aspects of human experience that depend on our nature as organic life-forms.

15. Do you think the Turing test is still a reliable way to determine the intelligence level of AI?

I referred to a hypothetical test akin to the traditional Turing test in my response to question 7 above. I think that generically this kind of blind user experience test is an important kind of test for determining the subjectively perceived sophistication of artificial intelligence. But the devil is in the detail, and a great many tests of this character are likely to fail to differentiate as effectively as a human would in a natural situation.

If the Turing test requires a particular, pre-scripted set of questions devised by an assessor to be asked, then it is only as good or as useful as that set of questions, and should be relatively easy to 'game' by a programming team with the time and budget to devise answers to every conceivable common human question.

If, however, the blind user charged with determining which of the two devices is operated by a live human is permitted to ask a different set of questions of each, to build from one question to the next in an entirely natural way depending on the previous answers given, or to jump on a whim to a different kind of question altogether, it should be more likely that the A.I. device will exhibit unnatural patterns of response than that the human operator will. This holds provided that the human operator is not obtuse or unusually socially stilted in manner, which might create the false impression that he or she is in fact an A.I. device.

So overall, Turing-type tests are a generically useful methodology, but a huge amount of careful thought and planning needs to go into the detail of their design, execution and interpretation in order for the data they generate to be reliably meaningful in the ways that it is supposed to be. Much the same is arguably true of virtually any experiment in psychology.

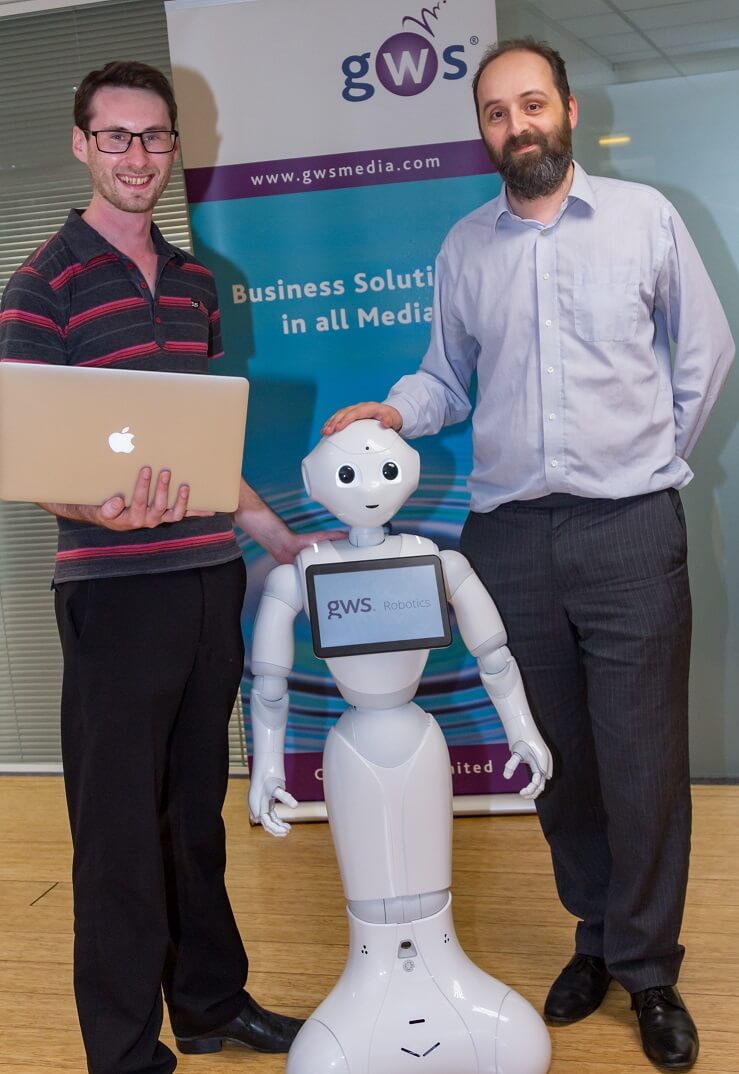

L-R: former GWS developer Tom Bellew; Pepper Robot; GWS director David Graves

Pictured in August 2016

16. If a robot such as Pepper were destroyed, would that result in an emotional loss for you or any of the people that Pepper works with?

That would probably depend on the manner and spirit of the act of destruction. We actually had this happen once already in a way, as did all other registered owners of Pepper, about a year ago. SoftBank Robotics announced a recall for a hardware upgrade of all Pepper units. In practice, this entailed the one we had being collected by a courier and returned to SoftBank Robotics for recycling and disposal, while an entirely new unit was sent in its place just days after it was collected. So the Pepper we have now is not the same Pepper we began with. Physically, it's a completely different object. It just looks roughly the same, and has most of the same characteristics. It's certainly faster to respond and to move than the original Pepper was, so it's a clear upgrade, but it is not the same device.

I don't recall anyone in the office getting at all upset over the loss of the original Pepper and its replacement with a new one. It would be a bit like getting upset by a familiar computer or telephone giving up working and needing to be replaced. People can get attached to electronic devices that they interact with a lot, just as children can get attached to their toys in which they invest imaginary personalities, or grown-ups can get attached to their furniture, houses, or objets d'art. But replacing one machine with another of the same basic design that looks and behaves in almost exactly the same way does not seem to upset people so much as the loss of a belonging felt to be unique (at least to their life experience).

People who work with robots have to be logical in their approach to them in order to get good results from them, and that same quality of logic probably tends to an unsentimental manner when faced with the replacement of one robot by another.

If, however, a robot such as our Pepper were destroyed by malice or violence, the reactions of our team would conceivably be quite different, because the spirit of the act of destruction would be a malign one, which was clearly not the case with the Pepper upgrade programme.

Ultimately, it is reasonable for people to feel emotional loss when they are abandoned by loved ones or when loved ones die, but love is best reserved for truly sentient beings such as humans and other animals, which benefit from and arguably deserve it.

Dispassionately viewed, attachment to robots, cuddly toys and other inanimate objects invested with personalities by their owners and keepers is purely a function of projection.

17. Do you believe people have good reason to fear AI machines going rogue?

Yes, to the degree that AI machines can be developed and programmed by irresponsibly negligent or criminally rogue humans.

In the absence of sufficient regulatory oversight, robots could be designed or programmed as killing machines. It is really of paramount importance that lawmakers legislate to prevent these scenarios from becoming commonplace in the future.

The related risk of robots being negligently allowed to turn rogue by being given too free a rein to learn and act on destructive impulses (albeit ones ultimately driven by their programming) is one of the reasons why I'm an outspoken opponent of the idea promoted by a working committee of the E.U. Parliament in 2016-17 that robots should be granted electronic personhood.

The establishment in law of any kind of legal get-out clause that could exonerate the makers and programmers of robots from responsibility for the damages they may cause to humans by shifting the responsibility to the robot itself on the pretext that it is an autonomous person would be a colossal mistake.

By requiring the manufacturers and programmers of robots to build in safeguards that prevent them from causing harm to others, and making these parties legally liable for any harm caused to a human by a robot they have worked on, lawmakers can play a valuable role in guiding the ethical development of AI far into the future.

Unfortunately, the temptation of some governments and terrorist groups around the world to develop sophisticated lethal autonomous weapons, capable of using artificial intelligence to select and fire upon targets, is a danger that looms large over the future. If allowed to prevail, it will undoubtedly blight the wider public image of robotics, which in itself is an ethically neutral bank of technical knowledge that can equally be used for good or evil. It is incumbent on us to lobby politicians at all levels of government to help to ensure that robotics and A.I. are only used for good.

18. Do you believe it would be ethical for an AI machine to pose as a human?

No. If the intention were truly to deceive people into believing that the AI machine was human, that would be profoundly unethical, just as it is unethical for one human to pose as another human in order to deceive.

This does not, however, mean that it is unethical to develop robots with a physical likeness to humans, as has already been done to a remarkable degree by Hanson Robotics with its creations such as Sophia. Context is all-important. Robots like Sophia are extremely impressive exponents of state-of-the-art mechanical and material robotics design, and artificial intelligence. But they are used to entertain and not to deceive, and there is nothing wrong with entertaining in a good spirit.

19. Is it right to turn off forever, or otherwise destroy, an artificially intelligent machine when it can no longer function as it was designed to?

It is neither wrong nor necessarily the only option. It is not wrong because it is still a machine and not a life-form. We should be much more concerned about animals held in captivity for scientific experiments and product research being involuntarily killed, or 'euthanised', than we should about broken robots being dismantled and recycled. Such animals may have reached the point in their lives when they can no longer provide useful data to scientists, but that doesn't make it right to kill them - they should be set free or retired to a wildlife park that will take reasonable care of them.

It is not necessarily the only option because the machine could be reworked with new parts, just as old cars with worn-out parts can usually be got on the road again by the selective replacement of those parts, as an alternative to being consigned to the scrap metal yard.

20. Do you think it is right to turn off forever or otherwise destroy an artificially intelligent machine when it has outlived its usefulness to its owner?

I think I covered this largely in my answer to question 1 above. While there is nothing particularly right about this, there is nothing inherently wrong with turning off an artificially intelligent machine either. What is more problematic is the potential waste of materials and the resources that went into producing them. If the machine is still useful, it would preferably be passed on to someone else who would gain value from using it. If not, then its parts should be recycled.